I conducted this HCI research with my friends.

- UX Research

- Website Development

- UX Researcher

- Website Developer

- Research Designer

Learning from Multimedia-Enhanced Scientific Articles

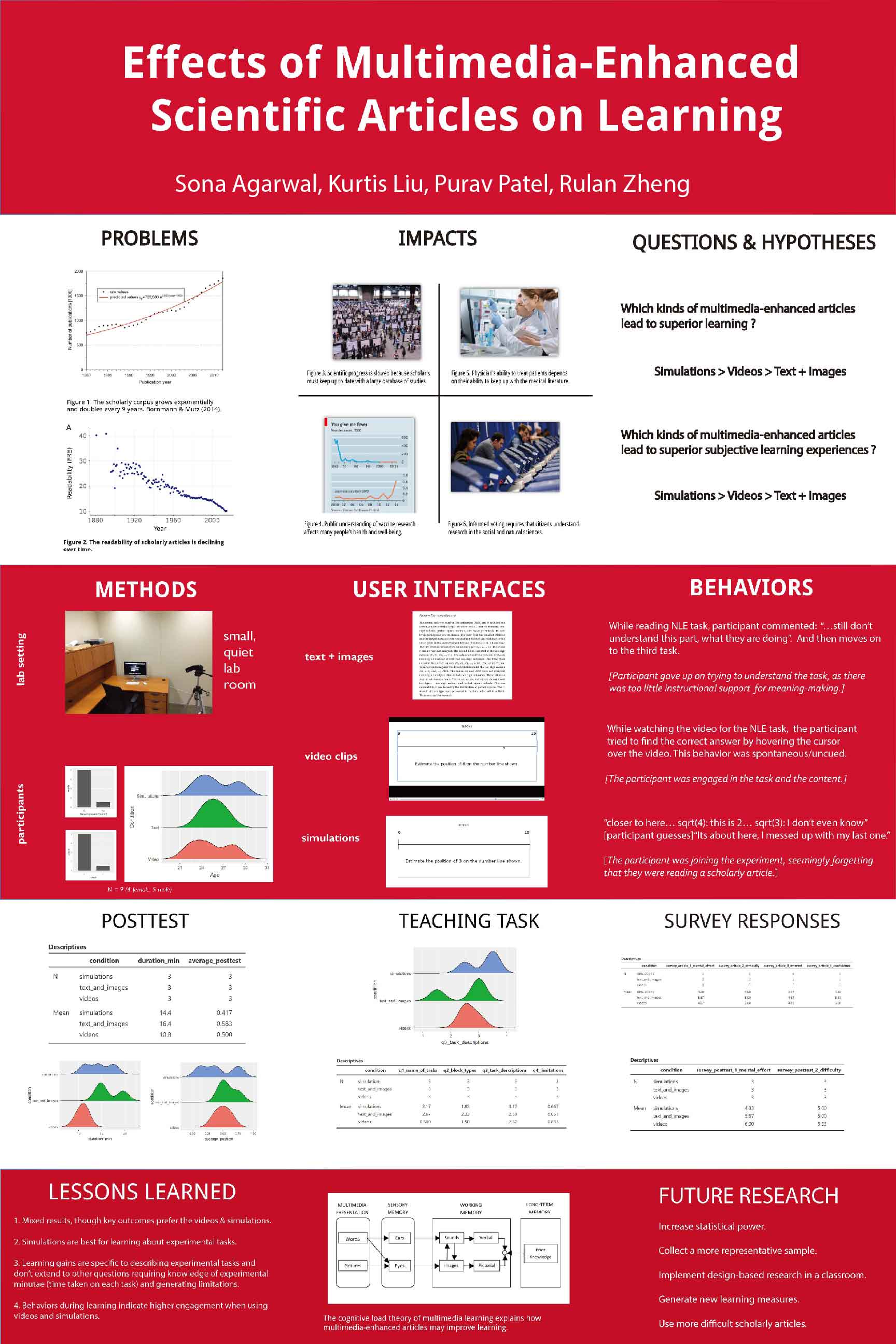

We asked two research questions. The first concerned the efficiency of learning:

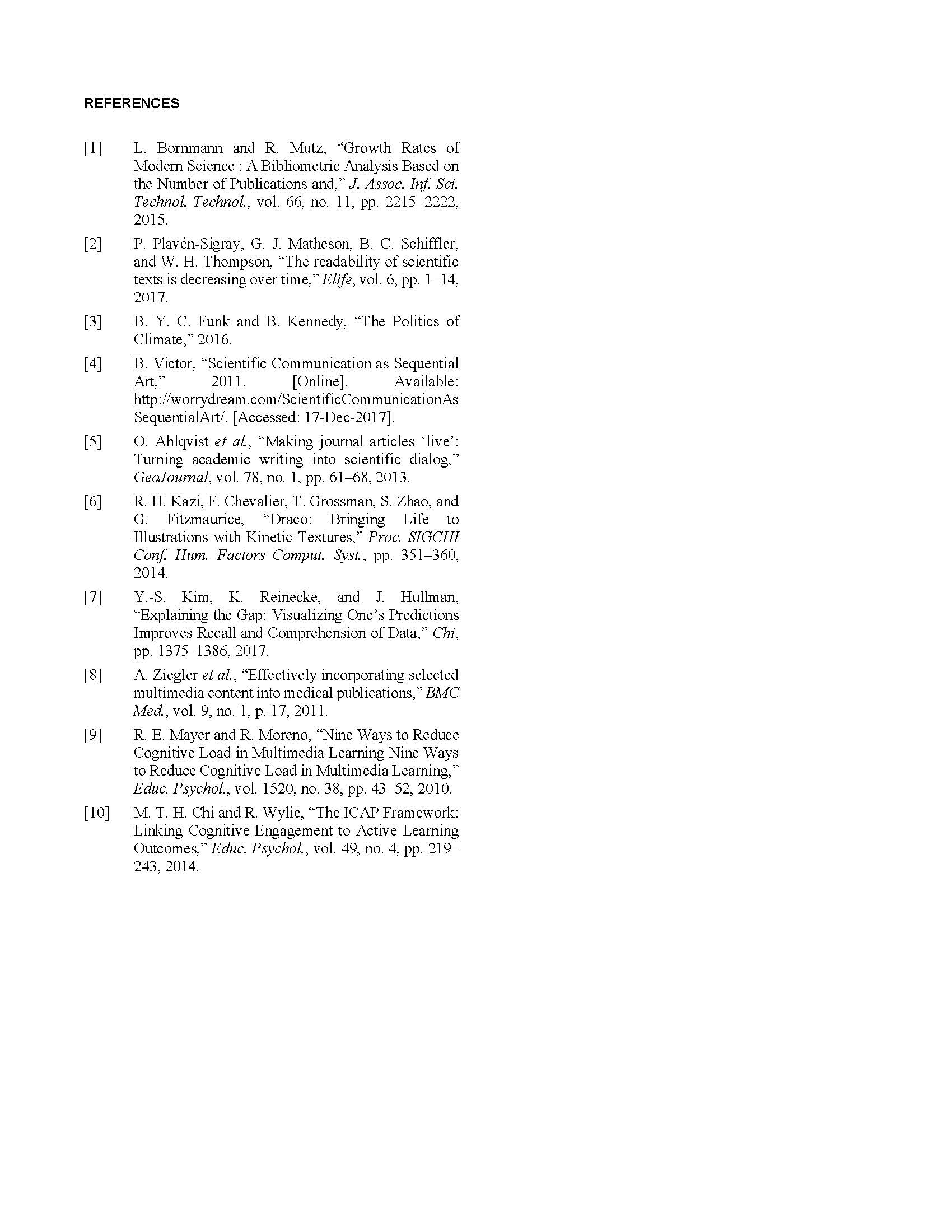

Peer-reviewed scholarly articles are the vehicles through which scientific discoveries are communicated, critiqued, and applied to real-world contexts. Whether these articles are published in print journals or hosted on websites, readers experience significant learning barriers. Consider, for instance, the difficulties faced when reading intricate methodologies. Articles often describe complex methods using only text and images. The short-term result is low comprehension that limits scientific learning; the long-term result is suboptimal scientific progress. We suggest that interactive simulations of experimental tasks interleaved with text may better convey methodological details. Here, we describe the results of a laboratory experiment wherein learners studied a scholarly publication via (1) text and images, (2) videos, or (3) interactive simulations of experimental tasks. Posttest scores and responses to a questionnaire revealed weak benefits for articles embedded with interactive simulations. Results are interpreted using the ICAP framework of cognitive engagement and the cognitive load theory of multimedia learning.

Static Version of Scholarly Article

Video Version of Scholarly Article

Simulation Version of Scholarly Article

Method

Setting

All experimental sessions were conducted in a small, quiet office repurposed as a laboratory room. Measures were taken to ensure that participants were comfortable in this setting. To avoid distractions, there was only one experimenter nearby at a time and the room was stripped of decorative elements like framed photos. Participants were seated in front of an iMac computer with a keyboard and mouse placed on a large wooden desk (Figure 1). A camcorder mounted on a tripod was positioned to the side and angled to face only the monitor. The experimenter, who was always the first author, was seated at a smaller desk behind the participant and to the right.

Participants

A total of 9 graduate students (4 female, 5 male) were recruited from a large Midwestern university using convenience sampling. Most participants were volunteers from a graduate-level Human-Computer Interaction course. Others were co-workers and friends. Participants from the Human-Computer Interaction course were compensated with an offer to participate in their projects. Other participants were not compensated. Participants’ ages were collected via a demographics questionnaire (M = 25.6, SD = 2.2). All but one of the participants were enrolled in a major unrelated to the psychological sciences. Likewise, just one participant spoke English as their native language. Age, education, and linguistic experience were similar between conditions.

Experimental Design

Procedure

Participants were assigned to one of the three conditions (interactive simulations, videos, or text & images) based on the order in which they arrived. This was needed to yoke the time spent on the videos to the time spent on the simulations; in other words, the durations of the videos matched the actual time participants spent on the simulations. Participants were briefly asked some questions to assess relevant prior knowledge about the article’s content. These questions served as a quick oral “pre-test.” No participants reported understanding the experimental tasks used in the article or anything about the psychology of numerical cognition.

Afterward, participants were given an overview of the experiment. They were told the general purpose of the study and the expected durations for each part of the experiment.

Participants were allowed to ask questions and to practice the think-aloud procedure (i.e., mentally computing 26 × 34). They were told to verbally report their steps while problem-solving. Then, participants began the learning task: reading the article out loud and reporting any thoughts as they occurred. If participants fell silent for more than 10 seconds, they were prompted to report their thoughts.

Next, the posttest and survey items were administered to all participants. The critical item on the posttest was the teaching task. Participants pretended to teach the content of the article to their friend or relative while drawing the experimental tasks. Next, a demographics questionnaire was administered. Everyone was fully debriefed and thanked for participating.
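The arrival-order assignment and yoking described in the Procedure can be illustrated with a minimal sketch. The rotation order (simulations first, then videos, then text and images), the participant labels, and both function names are assumptions made for illustration; the source only states that assignment followed arrival order so that video durations could match time spent on the simulations.

```python
from itertools import cycle

# Assumed rotation: a simulations session runs first so that its timing
# exists before the yoked video session. The source does not give the order.
CONDITION_ORDER = ["simulations", "videos", "text_images"]


def assign_by_arrival(participant_ids):
    """Assign conditions in a fixed rotation, following arrival order."""
    return {pid: cond for pid, cond in zip(participant_ids, cycle(CONDITION_ORDER))}


def yoked_partner(assignments, video_pid):
    """Return the most recent earlier simulations participant, or None."""
    ids = list(assignments)  # dicts preserve insertion (arrival) order
    earlier = ids[: ids.index(video_pid)]
    for pid in reversed(earlier):
        if assignments[pid] == "simulations":
            return pid
    return None


# Hypothetical participant labels P1..P9 in arrival order.
assignments = assign_by_arrival([f"P{i}" for i in range(1, 10)])
print(assignments["P2"], "is yoked to", yoked_partner(assignments, "P2"))
```

Under this assumed rotation, each video participant is paired with the simulations participant who arrived immediately before them, and that participant's time on the simulations would set the video durations.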

Final Paper: Learning from Multimedia-Enhanced Scientific Articles

RESULTS

Time on the Learning Task

The average time spent on the learning task was computed across conditions (M = 22.7 min, SD = 7.8 min). Differences were found between conditions. Those in the simulations condition spent the most time (M = 28.6 min, SD = 9.5 min), whereas those in the videos condition spent less (M = 20.6 min, SD = 5.8 min). Participants in the text and images condition spent the least time (M = 19 min, SD = 6.6 min). This was expected given that additional multimedia content requires more time to process.
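As a quick consistency check on these figures, the overall mean can be recomputed from the three condition means. The sketch below assumes three participants per condition (nine participants across three conditions); the source does not state the group sizes, so `group_sizes` is an assumption, and `grand_mean` is an illustrative helper rather than part of the original analysis.

```python
# Condition means in minutes, as reported in the text.
condition_means = {"simulations": 28.6, "videos": 20.6, "text_images": 19.0}

# Assumption: equal groups of three (nine participants, three conditions).
group_sizes = {"simulations": 3, "videos": 3, "text_images": 3}


def grand_mean(means, sizes):
    """Size-weighted mean of the condition means."""
    total_n = sum(sizes.values())
    weighted_sum = sum(means[cond] * sizes[cond] for cond in means)
    return weighted_sum / total_n


overall = grand_mean(condition_means, group_sizes)
print(round(overall, 1))  # 22.7, matching the reported overall mean
```

With equal group sizes, the recomputed value agrees with the reported overall mean of 22.7 min.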

Behaviors on the Learning Task

Think-aloud verbalizations and actions during the learning task were analyzed to explore learning processes. We found that those using text + images reached impasses during reading. For instance, one participant said “…still don’t understand this part, what they are doing.” Following this, they moved on to the third task. This indicates that, with the interface resembling a traditional scholarly article, participants had too little insight into the nature of the experimental tasks. In contrast, one participant in the videos condition watched many videos actively. They moved their mouse toward what they believed to be the correct answer. This behavior was spontaneous (not cued by the experimenter). The highest level of engagement was displayed by participants given simulations. Consider what one participant in this condition said: “closer to here… sqrt(4): this is 2… sqrt(3): I don’t even know” [participant guesses] “It’s about here, I messed up with my last one.” We interpret this to mean that the participant was experiencing the experimental task and having learning experiences qualitatively different from participants in the other conditions.

Accuracy on Verbatim Items

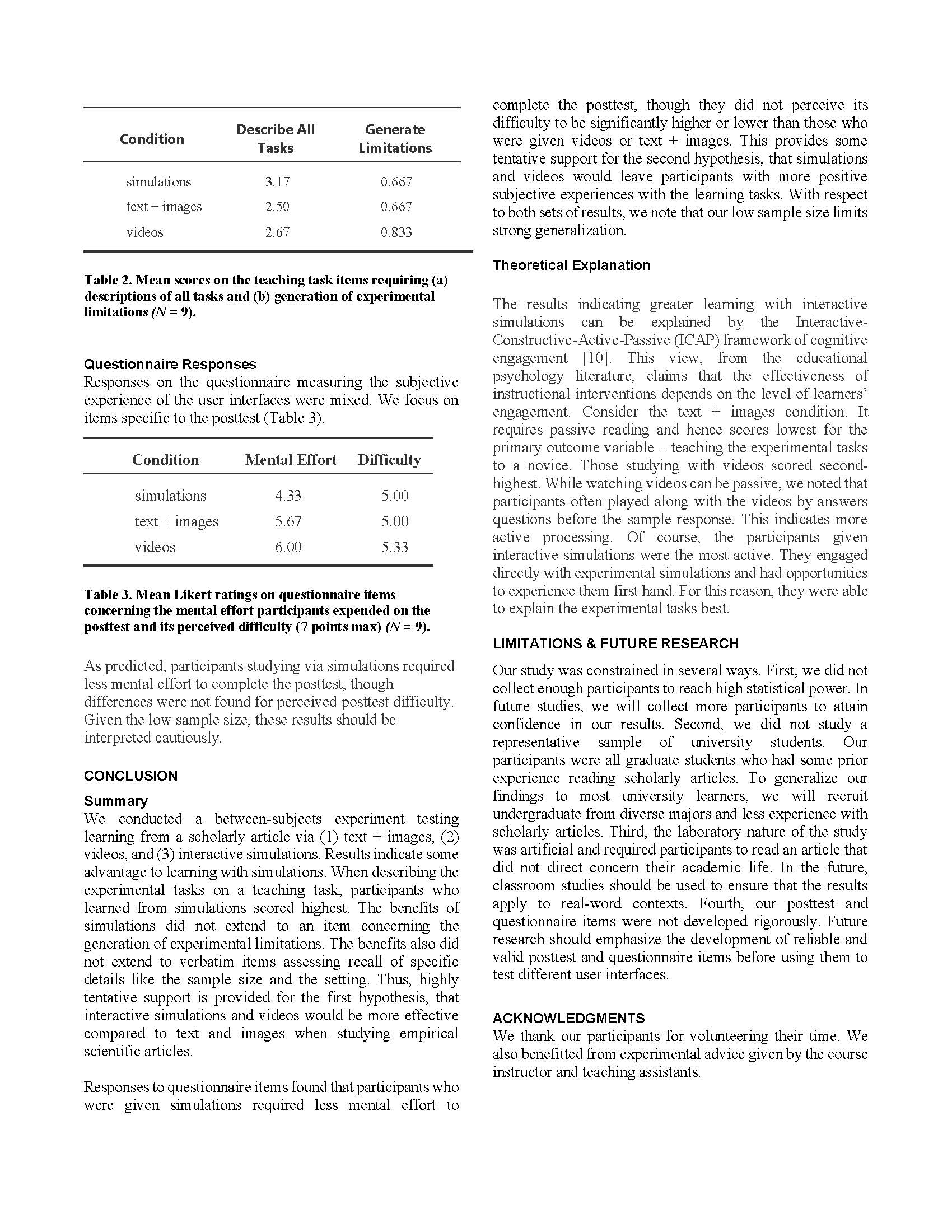

For the verbatim items (focused on recalling experimental minutiae), the lowest score was for those studying with simulations (M = 41.7%). Those using videos scored higher (M = 50%) while participants given the text + images articles performed best (M = 58.3%). These results contradict the first hypothesis, though the small sample size limits any generalization. Given the sample size, we did not conduct inferential statistical tests (Table 1).

Accuracy on the Teaching Task

For the teaching task, participants’ verbalizations and drawings were analyzed to determine how well they understood the experimental tasks. The first two co-authors coded all nine recordings of the teaching sessions. Interrater agreement was 100%. Those studying simulations received the highest scores (M = 3.17). Those studying videos performed second highest (M = 2.67). Finally, participants given only text + images scored lowest (M = 2.50). This pattern supports the first hypothesis, particularly considering that the use of simulations was intended to improve how well participants understood the experimental tasks. Importantly, the advantage for simulations was specific to describing the tasks. It did not extend to an item focused on generating limitations (Table 2).
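The source does not say which agreement statistic was used. One common choice is simple percent agreement, sketched below. The function name and the example scores are hypothetical placeholders, not the study's data.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of items on which two coders gave the same code."""
    if len(coder_a) != len(coder_b):
        raise ValueError("Both coders must rate the same items")
    if not coder_a:
        raise ValueError("At least one rated item is required")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical codes for nine recordings (placeholders, not study data).
coder_1 = [3, 2, 4, 3, 2, 3, 4, 2, 3]
coder_2 = [3, 2, 4, 3, 2, 3, 4, 2, 3]
print(percent_agreement(coder_1, coder_2))  # 100.0
```

Percent agreement does not correct for chance agreement, so with larger samples a chance-corrected statistic may be preferable.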

Questionnaire Responses

As predicted, participants studying via simulations reported needing less mental effort to complete the posttest, though differences were not found for perceived posttest difficulty. Given the small sample size, these results should be interpreted cautiously.

Conclusion

Summary

We conducted a between-subjects experiment testing learning from a scholarly article via (1) text + images, (2) videos, or (3) interactive simulations. Results indicate some advantage to learning with simulations. When describing the experimental tasks in the teaching task, participants who learned from simulations scored highest. The benefits of simulations did not extend to an item concerning the generation of experimental limitations. The benefits also did not extend to verbatim items assessing recall of specific details like the sample size and the setting. Thus, highly tentative support is provided for the first hypothesis, that interactive simulations and videos would be more effective than text and images when studying empirical scientific articles.

Responses to questionnaire items showed that participants who were given simulations reported needing less mental effort to complete the posttest, though they did not perceive it as more or less difficult than did those who were given videos or text + images. This provides some tentative support for the second hypothesis, that simulations and videos would leave participants with more positive subjective experiences with the learning tasks. With respect to both sets of results, we note that our small sample size limits strong generalization.

Theoretical Explanation

The results indicating greater learning with interactive simulations can be explained by the Interactive-Constructive-Active-Passive (ICAP) framework of cognitive engagement [10]. This view, from the educational psychology literature, claims that the effectiveness of instructional interventions depends on the level of learners’ engagement. Consider the text + images condition. It requires only passive reading and hence produced the lowest scores on the primary outcome variable, teaching the experimental tasks to a novice. Those studying with videos scored second-highest. While watching videos can be passive, we noted that participants often played along with the videos by answering questions before the sample response was shown. This indicates more active processing. Of course, the participants given interactive simulations were the most active. They engaged directly with experimental simulations and had opportunities to experience them firsthand. For this reason, they were able to explain the experimental tasks best.

Get the code at: GitHub

Visit our static article website at: Static Version

Visit our video article website at: Video Version

Visit our simulation article website at: Simulation Version

Ask us any questions at: 96rulanzheng@gmail.com

Questions?

Poster Session